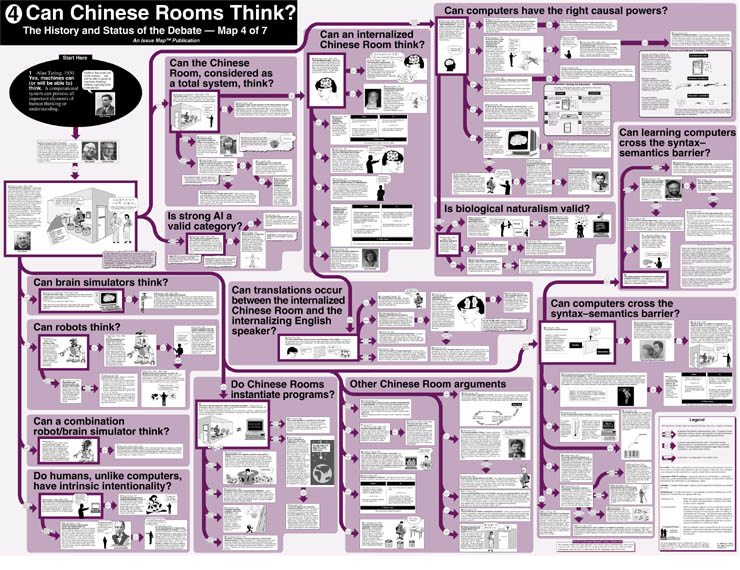

Can Chinese Rooms think? (Map 4)

The Chinese Room Argument was proposed by John Searle in response to the claim that physical symbol systems can think. The argument can also be viewed in context on Map 3.

The questions explored on Map 4, "Can Chinese Rooms think?", are:

- Do humans, unlike computers, have intrinsic intentionality?

- Is biological naturalism valid?

- Can computers cross the syntax-semantics barrier?

- Can learning machines cross the syntax-semantics barrier?

- Can brain simulators think?

- Can robots think?

- Can a combination robot/brain simulator think?

- Can the Chinese Room, considered as a total system, think?

- Do Chinese Rooms instantiate programs?

- Can an internalized Chinese Room think?

- Can translations occur between the internalized Chinese Room and the internalizing English speaker?

- Can computers have the right causal powers?

- Is strong AI a valid category?

- Other Chinese Room arguments

The Chinese Room Argument was proposed by John Searle (1980 & 1990):

Imagine that a man who does not speak Chinese sits in a room and is passed Chinese symbols through a slot in the door. To him, the symbols are just so many squiggles and squoggles. But he reads an English-language rulebook that tells him how to manipulate the symbols and which ones to send back out.

To the Chinese speakers outside, whoever (or whatever) is in the room is carrying on an intelligent conversation. But the man in the Chinese room does not understand Chinese; he is merely manipulating symbols according to a rulebook. He is instantiating a formal program that passes the Turing test for intelligence, yet he still does not understand Chinese. This shows that the instantiation of a formal program is not enough to produce semantic understanding or intentionality.

Intentionality: the property of a mental state of being directed at a state of affairs in the world. For example, the belief that Sally is in front of me is directed at a person in the world, namely Sally.

Although these terms differ in important and subtle ways, in this debate "intentionality" is sometimes treated as synonymous with representation, understanding, consciousness, meaning, and semantics.

Note: for more on Turing tests, see Map 2. For more on formal programs and instantiation, see the "Is the brain a computer?" arguments on Map 1, the "Can functional states generate consciousness?" arguments on Map 6, and "Formal systems: an overview" on Map 7.