Stochastic gradient descent is a gradient descent optimization method for minimizing an objective function that is written as a sum of differentiable functions.

Background

Main article: M-estimation

Both statistical estimation and machine learning consider the problem of minimizing an objective function that has the form of a sum:

$$Q(w) = \sum_{i=1}^n Q_i(w),$$

where the parameter $w$ is to be estimated and where typically each summand function $Q_i$ is associated with the $i$-th observation in the data set (used for training).

In classical statistics, sum-minimization problems arise in least squares and in maximum-likelihood estimation (for independent observations). Estimators that arise as minimizers of sums are called M-estimators. However, in statistics, it has long been recognized that requiring even local minimization is too restrictive for some problems of maximum-likelihood estimation, as shown, for instance, by Thomas Ferguson's example.[1] Therefore, contemporary statistical theorists often consider stationary points of the likelihood function (or zeros of its derivative, the score function, and other estimating equations).

The sum-minimization problem also arises for empirical risk minimization: in this case, $Q_i(w)$ is the value of the loss function at the $i$-th example, and $Q(w)$ is the empirical risk.

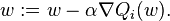

When used to minimize the above function, a standard (or "batch") gradient descent method would perform the following iterations:

$$w := w - \eta \nabla Q(w) = w - \eta \sum_{i=1}^n \nabla Q_i(w),$$

where $\eta$ is a step size (sometimes called the learning rate in machine learning).
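
As a minimal sketch of the batch iteration, the following Python snippet assumes a hypothetical one-dimensional objective with summands $Q_i(w) = (w - a_i)^2$, whose minimizer is the mean of the $a_i$. The names `grad_summand` and `batch_gradient_descent`, the data, and the step size are illustrative assumptions rather than anything prescribed by the text.

```python
def grad_summand(w, a_i):
    """Gradient of the illustrative summand Q_i(w) = (w - a_i)**2."""
    return 2.0 * (w - a_i)


def batch_gradient_descent(data, w0=0.0, eta=0.05, n_iters=200):
    """Batch gradient descent on Q(w) = sum_i (w - a_i)**2 (assumed objective)."""
    w = w0
    for _ in range(n_iters):
        # Every iteration evaluates the gradient of every summand.
        full_grad = sum(grad_summand(w, a_i) for a_i in data)
        w = w - eta * full_grad
    return w


# Hypothetical data; the minimizer of Q is the mean, 3.0.
data = [1.0, 2.0, 3.0, 6.0]
w_hat = batch_gradient_descent(data)
```

Each iteration costs one gradient evaluation per summand, which is the expense that motivates sampling when the data set is large.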

In many cases, the summand functions have a simple form that enables inexpensive evaluations of the sum-function and the sum gradient. For example, in statistics, one-parameter exponential families allow economical function-evaluations and gradient-evaluations.

However, in other cases, evaluating the sum-gradient may require expensive evaluations of the gradients from all summand functions. When the training set is enormous and no simple formulas exist, evaluating the sums of gradients becomes very expensive, because evaluating the gradient requires evaluating all the summand functions' gradients. To economize on the computational cost at every iteration, stochastic gradient descent samples a subset of summand functions at every step. This is very effective in the case of large-scale machine learning problems.[2]

Iterative method

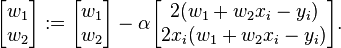

Fluctuations in the total objective function as gradient steps with respect to mini-batches are taken.

In stochastic (or "on-line") gradient descent, the true gradient of $Q(w)$ is approximated by a gradient at a single example:

$$w := w - \eta \nabla Q_i(w).$$

As the algorithm sweeps through the training set, it performs the above update for each training example. Several passes over the training set are made until the algorithm converges. Typical implementations may also randomly shuffle training examples at each pass and use an adaptive learning rate.

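In pseudocode, stochastic gradient descent with shuffling of the training set at each pass can be presented as follows:

- Choose an initial vector of parameters $w$ and learning rate $\eta$.
- Repeat until an approximate minimum is obtained:
  - Randomly shuffle examples in the training set.
  - For $i = 1, 2, \ldots, n$, do:
    - $w := w - \eta \nabla Q_i(w)$.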
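
A minimal Python sketch of this procedure might look as follows. It assumes a scalar parameter, a fixed number of passes in place of a convergence test, and a hypothetical callback `grad_i(w, i)` that returns the gradient of the $i$-th summand. The function name `sgd` and the toy summands $(w - a_i)^2$ are illustrative only.

```python
import random


def sgd(grad_i, n, w0, eta=0.01, n_epochs=100, seed=0):
    """Stochastic gradient descent with reshuffling at each pass.

    grad_i(w, i) is assumed to return the gradient of the i-th summand at w.
    """
    rng = random.Random(seed)
    w = w0
    indices = list(range(n))
    for _ in range(n_epochs):          # fixed number of passes (assumption)
        rng.shuffle(indices)           # randomly shuffle the training examples
        for i in indices:
            w = w - eta * grad_i(w, i)  # update from a single example
    return w


# Hypothetical summands Q_i(w) = (w - a_i)**2; the sum is minimized at 3.0.
data = [1.0, 2.0, 3.0, 6.0]
w_hat = sgd(lambda w, i: 2.0 * (w - data[i]), n=len(data), w0=0.0)
```

With a constant step size the iterate does not settle exactly on the minimizer; it ends up in a small neighbourhood of it, which is one reason implementations often decrease the learning rate over time.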

A compromise between batch and single-example updates, often called "mini-batches", approximates the true gradient by a sum over a small number of training examples.
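
The mini-batch variant can be sketched in Python under the same assumptions as a single-example loop: a scalar parameter, a fixed number of passes, and a hypothetical callback `grad_i(w, i)` for the gradient of the $i$-th summand. The name `minibatch_sgd` and the `batch_size` parameter are illustrative.

```python
import random


def minibatch_sgd(grad_i, n, w0, batch_size=2, eta=0.01, n_epochs=100, seed=0):
    """Mini-batch SGD: each step sums the gradients of a few summands."""
    rng = random.Random(seed)
    w = w0
    indices = list(range(n))
    for _ in range(n_epochs):
        rng.shuffle(indices)
        for start in range(0, n, batch_size):
            batch = indices[start:start + batch_size]
            # Approximate the full gradient by a sum over the mini-batch.
            g = sum(grad_i(w, i) for i in batch)
            w = w - eta * g
    return w


# Hypothetical summands Q_i(w) = (w - a_i)**2; the sum is minimized at 3.0.
data = [1.0, 2.0, 3.0, 6.0]
w_hat = minibatch_sgd(lambda w, i: 2.0 * (w - data[i]), n=len(data), w0=0.0)
```

In this sketch, `batch_size=1` gives the single-example update and `batch_size=n` gives the batch update, so the parameter interpolates between the two forms.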

The convergence of stochastic gradient descent has been analyzed using the theories of convex minimization and of stochastic approximation. Briefly, when the learning rates $\eta$ decrease with an appropriate rate, and subject to relatively mild assumptions, stochastic gradient descent converges almost surely to a global minimum when the objective function is convex or pseudoconvex, and otherwise converges almost surely to a local minimum.[3][4] This is in fact a consequence of the Robbins-Siegmund theorem.[5]
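
As background on what "an appropriate rate" typically means, the classical conditions from the stochastic approximation literature (stated here as an assumption about the intended setting, since the passage does not spell them out) require the step size $\eta_t$ used at iteration $t$ to satisfy

$$\sum_{t=1}^{\infty} \eta_t = \infty, \qquad \sum_{t=1}^{\infty} \eta_t^2 < \infty.$$

A schedule such as $\eta_t = \eta_0 / t$ with $\eta_0 > 0$ meets both conditions: the steps shrink slowly enough to reach any point, yet fast enough for the gradient noise to average out. A constant step size satisfies the first condition but not the second.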

Example

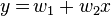

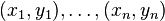

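Let's suppose we want to fit a straight line $y = w_1 + w_2 x$ to a training set of two-dimensional points $(x_1, y_1), \ldots, (x_n, y_n)$ using least squares. The objective function to be minimized is:

$$Q(w) = \sum_{i=1}^n Q_i(w) = \sum_{i=1}^n \left(w_1 + w_2 x_i - y_i\right)^2.$$

The last line in the above pseudocode for this specific problem will become:

$$\begin{bmatrix} w_1 \\ w_2 \end{bmatrix} := \begin{bmatrix} w_1 \\ w_2 \end{bmatrix} - \eta \begin{bmatrix} 2 (w_1 + w_2 x_i - y_i) \\ 2 x_i (w_1 + w_2 x_i - y_i) \end{bmatrix}.$$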
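
The line-fitting update can be tried out with a short Python sketch. The function name `fit_line_sgd`, the step size, the number of passes, and the noise-free data set are all hypothetical choices made for illustration. The summand is $Q_i(w) = (w_1 + w_2 x_i - y_i)^2$.

```python
import random


def fit_line_sgd(points, eta=0.01, n_epochs=2000, seed=0):
    """Fit y = w1 + w2 * x by SGD on Q_i(w) = (w1 + w2 * x_i - y_i)**2."""
    rng = random.Random(seed)
    w1, w2 = 0.0, 0.0
    order = list(range(len(points)))
    for _ in range(n_epochs):
        rng.shuffle(order)
        for i in order:
            x_i, y_i = points[i]
            residual = w1 + w2 * x_i - y_i
            # Both components are updated from the same residual.
            w1, w2 = (w1 - eta * 2.0 * residual,
                      w2 - eta * 2.0 * x_i * residual)
    return w1, w2


# Hypothetical noise-free data lying exactly on the line y = 1 + 2x.
points = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w1, w2 = fit_line_sgd(points)
```

Because these points lie exactly on a line, every summand is minimized by the same parameters, so even a constant step size recovers the intercept and slope closely. With noisy data the iterates would instead fluctuate around the least-squares solution unless the step size is decreased.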

Applications

Stochastic gradient descent is a popular algorithm for training a wide range of models in machine learning, including (linear) support vector machines, logistic regression (see, e.g., Vowpal Wabbit) and graphical models.[6] It competes with the L-BFGS algorithm, which is also widely used. SGD has been used since at least 1960 for training linear regression models, originally under the name ADALINE.[7]

When combined with the backpropagation algorithm, it is the de facto standard algorithm for training (shallow) artificial neural networks.

Another popular stochastic gradient descent algorithm is the least mean squares (LMS) adaptive filter.

References

- Ferguson, Thomas S. (1982). "An inconsistent maximum likelihood estimate". Journal of the American Statistical Association 77 (380): 831–834. doi:10.1080/01621459.1982.10477894. JSTOR 2287314.

- Bottou, Léon; Bousquet, Olivier (2008). "The Tradeoffs of Large Scale Learning". Advances in Neural Information Processing Systems 20. pp. 161–168.

- Bottou, Léon (1998). "Online Algorithms and Stochastic Approximations". Online Learning and Neural Networks. Cambridge University Press. ISBN 978-0-521-65263-6.

- Kiwiel, Krzysztof C. (2001). "Convergence and efficiency of subgradient methods for quasiconvex minimization". Mathematical Programming (Series A) 90 (1) (Berlin, Heidelberg: Springer). pp. 1–25. doi:10.1007/PL00011414. ISSN 0025-5610. MR 1819784.

- Robbins, Herbert; Siegmund, David O. (1971). "A convergence theorem for non negative almost supermartingales and some applications". In Rustagi, Jagdish S. Optimizing Methods in Statistics. Academic Press.

- Finkel, Jenny Rose; Kleeman, Alex; Manning, Christopher D. (2008). "Efficient, Feature-based, Conditional Random Field Parsing". Proc. Annual Meeting of the ACL.

- Pfeffer, Avi. "CS181 Lecture 5 — Perceptrons". Harvard University.

- Kiwiel, Krzysztof C. (2003). "Convergence of approximate and incremental subgradient methods for convex optimization". SIAM Journal on Optimization 14 (3). pp. 807–840. doi:10.1137/S1052623400376366. MR 2085944. (Extensive list of references)

- Duda, Richard O.; Hart, Peter E.; Stork, David G. (2000). Pattern Classification. ISBN 0-471-05669-3.

- Spall, James C. (2003). Introduction to Stochastic Search and Optimization. ISBN 0-471-33052-3.

External links